By Rex Siu and Lauren Ivory

Generative AI is not advanced enough to replace human journalism and is the equivalent of a ‘tribute band’ compared to the real thing, a panel of researchers and journalists has said.

However, the panel also heard that the national broadcaster, the ABC, is developing an AI model for future use by its news service, ABC News, and is moving into the prototyping phase.

Central News invited leading industry experts to a talk at the University of Technology Sydney to share their views on the emerging technology and its implications for the present and future of journalism.

“I look at AI as a tribute band,” said Maryanne Taouk, an AI researcher and a digital and data journalist for ABC News. “They’re never going to be Kendrick Lamar on stage, but they are going to be someone that can rap in a Kendrick Lamar style.

“Whatever you’re going to get from [AI], it is going to have that kind of cringe underlying thing that it’s not actually real.”

Dr Michael Davis is a research fellow at the Centre for Media Transition (CMT), where he is leading a research project on AI’s implications for public interest journalism. He believes that despite the name, AI may not currently possess the intelligence many assume.

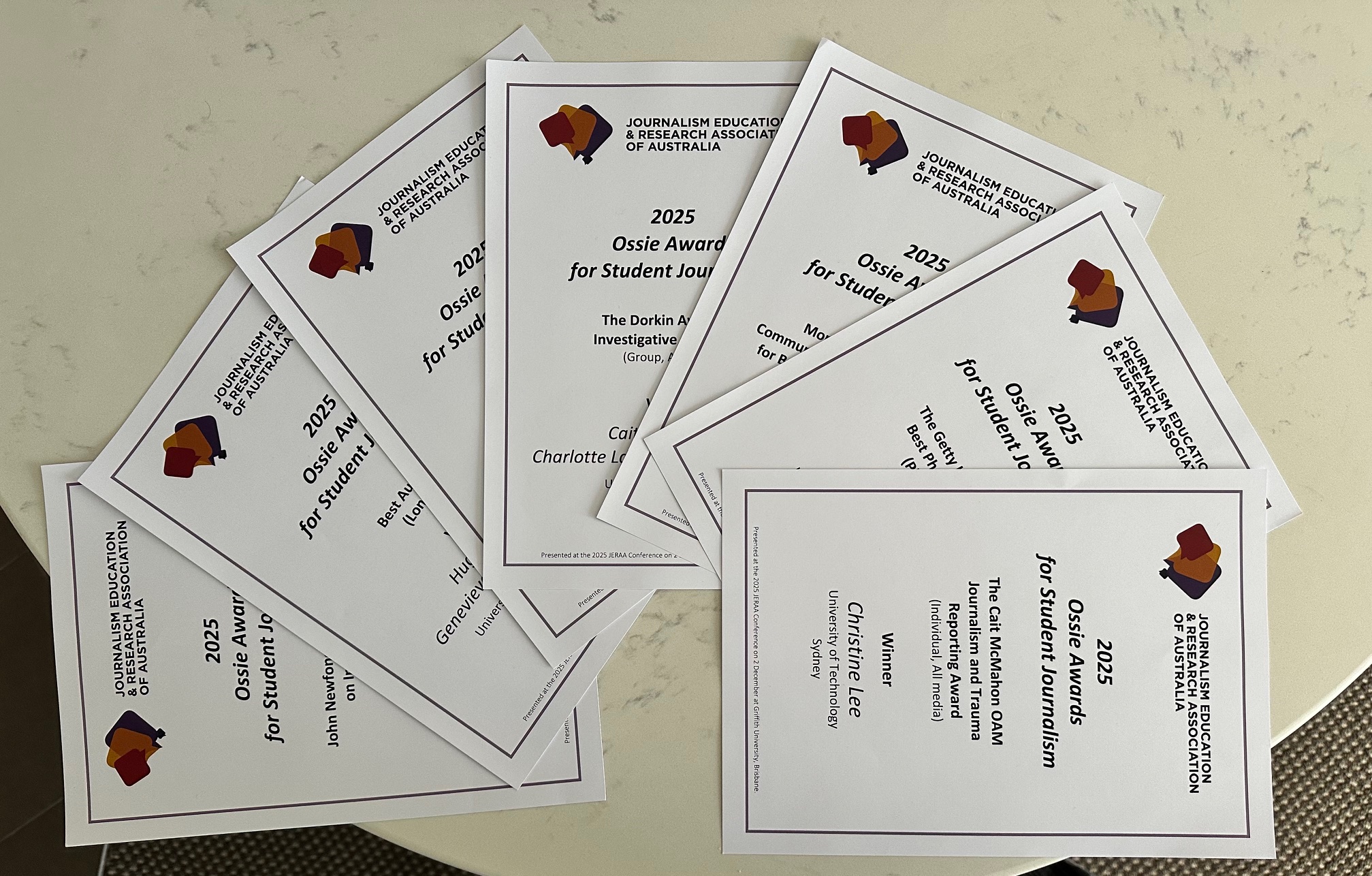

‘The Future of News: How Journalists are Using GenAI’ held at Central News. Photo: Lucas Tan / Central News

“Although it’s called artificial intelligence, it really isn’t intelligent,” said Dr Davis.

“It can’t think for you, it can’t generate incisive reasoning, to get behind what’s really happening out there in the world, for example, in political machinations and so on, it can’t do that for you.”

He said AI can be useful as a tool for journalists, for example, for data analysis or finding patterns.

Kevin Nguyen, an investigative journalist at ABC News who has researched and investigated AI for almost a decade, said: “When we talk about the future of newsrooms (with AI), we’re talking about, can you talk, function or sound convincingly like a human?”

The panellists agreed that, ultimately, AI models can only reach a certain level of capability and may never truly be able to replicate all human qualities and accuracy.

Newsrooms were already trying to cut costs before AI, Dr Davis said, but cost-effectiveness had yet to become their main focus. Instead, efforts to produce audience-oriented quality journalism continued.

“I think that’s not going to change (by AI). As long as that demand is there, then there’s going to be space for proper journalists doing their jobs,” he said.

CMT research fellow Dr Michael Davis leads a research project in GenAI implications in public interest journalism. Photo: Rodger Liang

Taouk added that AI can’t yet meet the demands of newsrooms and that it is not cost-effective to scale up generative AI to the level needed for mainstream news outlets.

“So if it’s going to cost them $9,000 of tokens to work with this ChatGPT model, they’re not going to do it,” she said. “They’d rather just pay a salary… rather than pay that much for an untested, un-red-teamed model that could just go rogue and destroy their reputation.

“Because reputation in journalism is pretty much the biggest thing – being trustworthy. Being a source of information and not misinformation is one of the biggest things.

“And if you don’t have a source, like a generative AI model, that cannot guarantee that, then I don’t think it’s taking anybody’s jobs.”

The panellists also discussed the limitations of AI in addressing bias in language, particularly when it comes to issues of race, gender, and credibility, and how AI models can perpetuate existing power structures and biases if they are trained on biased data.

Nguyen said it would be difficult for AI models to reach objectivity or neutrality because the general population, who feed data into the language models, are not thoroughly informed on topics such as bias, racism and sexism.

But what is GenAI, and what can it mean for journalism?

According to Bard, a GenAI chatbot developed by Google: ‘Generative AI is a type of artificial intelligence that can create new content, such as text, images, audio, and video. It does this by learning patterns from existing data and then using this knowledge to generate new and unique outputs.’

According to Nguyen, “AI is simply a kind of output of really precise and complex mathematical probabilities”.

Recent breakthroughs in the field, such as the chatbots ChatGPT and Bing, which are powered by large language models (LLMs), and the image generator Midjourney, have advanced the capabilities of generative AI. These tools can now write poems, code programs, or compose music that is almost indistinguishable from human-created content, and with greater efficiency.

“Historically, we’ve been using what’s called ‘machine learning’ for a very, very long time,” Nguyen said.

“What AI is in the common public lexicon, at the moment, is large language models, which are quite different to what we have historically used.

“I would say it has kind of exploded the most in a year and a half… I feel like we’ve been blindsided by the growth of AI in the past year because of the constraints of our imagination. I’m really, really on the ball with technology, and I could not have predicted how fast this moves.”

Nguyen and Taouk are the current Australian fellows of JournalismAI, a world-leading research initiative promoting responsible AI use in the field. It is run by Polis, the media think tank of the London School of Economics and Political Science, which researches key issues and policies of news and journalism in the UK and worldwide. In their fellowship, the two focus on identifying bias in AI adoption.

The project defined AI in its 2019 report as “a collection of ideas, technologies, and techniques that relate to a computer system’s capacity to perform tasks normally requiring human intelligence”.

JournalismAI conducted a survey on the use of AI technologies across 105 news outlets in 46 countries in the past years and published its latest report, ‘Generating Change’, in September.

“This is a critical phase again for news media around the world,” Polis director Professor Charlie Beckett says in the report. “Our survey shows it [AI] is already changing journalism.

“Journalists have never been under so much pressure economically, politically and personally. GenAI will not solve those problems, and it might well add some, too.”

Professor Beckett added, though, that journalism will see a “golden age” with AI-driven tools, as they will free up journalists to do more creative work, which “brings exciting opportunities for efficiency and even creativity”.

How is AI being used in global journalism?

Newsrooms across the world are currently integrating generative AI into their systems. More than 75 per cent of global news organisations have adopted AI in at least one area of news gathering, production or distribution, according to the latest survey by Polis/LSE.

They are adopting different approaches to GenAI based on their sizes, mission and resources, the survey found.

“Some media organisations have a lot bigger budgets to devote to investigations and experiments of how generative AI might be useful; what the opportunities are. Other (smaller) newsrooms haven’t put much thought into it at all, (although) they’re aware of what’s happening,” said Dr Davis.

Inequalities in AI adoption in journalism are evident, Professor Beckett said in CMT’s podcast ‘Double Take’, with Global South newsrooms facing barriers due to resource constraints and language limitations.

“Rich North American news organisations like The New York Times or Wall Street Journal, they’ve got the resources, the technologists and so on, to take advantage of this tech, especially because this tech is happening so fast, so rapidly and evolving so quickly,” Prof Beckett told Dr Davis.

“If you’re a local newsroom, for example, even in the Global North, you won’t have that resource. And certainly, if you’re in a non-English speaking country, for example, that erects a barrier because most of this technology is English.”

In his research and interviews with newsrooms, Dr Davis has learned the industry is cautiously exploring AI tools for their capabilities and impact on business models while acknowledging risks to ethical journalistic practice.

ABC News has been building and training models to create a chatbot that can be integrated into its online features, Taouk told the audience, emphasising the challenges it faced in doing so.

“It was worse than we thought it would be,” she said. “That’s something that we’ve learned from the outset. You have to take it by the hand and really go step by step through every process.”

Why AI cannot take away journalists’ jobs

“I would argue that the key challenge for journalism today is not to create more content,” Prof Beckett said on Double Take. He believes that while AI can help with audience engagement, it is not good enough to create original content or uncover the truth.

“The key challenge is optimising the connection with your audience, calling what you want – the reader, the viewer, the user, whatever they are, to make sure that you are maximising the efficiency of giving people relevant, useful, enjoyable (and) stimulating journalism because the real problem is that people are overwhelmed with the amount of news out there.”

AI is getting a reputation for assisting in mundane tasks, such as reformatting and categorising. But it still lacks the human elements that responsible and quality journalism requires.

“Empathy, connectivity, or relationship, judgment, curiosity, for example, and perhaps imagination,” Prof Beckett said.

In the Central News discussion, Dr Davis reiterated this point.

“I don’t think it’s going to take journalists’ jobs away,” he said.

Instead, he believes AI will create more job opportunities than before, likening it to what has happened with technology in the past.

“Just like when the computer was invented, some jobs disappeared, but what ended up happening is that other people ended up doing more jobs than what they did before, and I think that’s what’s going to happen,” he said.

“I’m sure [there are] a whole lot of uses that we haven’t even imagined yet.”

Taouk agreed and said new jobs are being created from AI advancements.

“What we love about journalism is not just the stories that are being told, but the people that are telling them,” she said. “And as clever as an LLM can be, I don’t think it’s going to get a soul.”

Generative AI tools, including Otter.ai, were used in the newsgathering process for this story.

To learn more about the risks and opportunities generative AI brings to news organisations around the world, listen to the latest episode of Double Take: ‘The Real Implications of Generative AI’.

Video shot by Lucas Tan and Tatiya Kuleechuay, and edited by Lauren Ivory.